Visual Reactive Programming – Bonsai is a Cajal NeuroKit. NeuroKits are hybrid courses that combine online lectures on fundamental and advanced neuroscience topics with hands-on, physical experiments.

Researchers from all over the world can take part: the course material is sent by post in a kit box containing all the tools needed to follow the online course.

Course overview

Modern neuroscience relies on combining multiple technologies to obtain precise measurements of neural activity and behaviour. Commercially available software for data acquisition and control is often expensive, closed to modification, and unable to cope with this growing complexity, forcing experimenters to constantly patch together diverse pieces of software.

This course introduces the basics of the Bonsai programming language, a high-performance, easy-to-use and flexible visual environment for designing closed-loop neuroscience experiments that combine physiological and behavioural data.

Bonsai has allowed scientists with no previous programming experience to quickly develop and scale up experimental rigs, and it can be used to integrate new open-source hardware and software.

What will you learn?

By the end of the course you will be able to use Bonsai to:

– create data acquisition and processing pipelines for video and visual stimulation.

– control behavioral task states and run your closed-loop experiments.

– collect data from cameras, microphones, Arduino boards, electrophysiology devices, etc.

– achieve precise synchronization of independent data streams.

The online material will soon be available here.

Faculty

Gonçalo Lopes

Course Director

NeuroGEARS, London, UK

Instructors

João Frazão – Champalimaud Research, Lisbon, PT

Niccolò Bonacchi – International Brain Laboratory, Lisbon, PT

Nicholas Guilbeault – University of Toronto, CA

André Almeida – NeuroGEARS, London, UK

Bruno Cruz – Champalimaud Research, Lisbon, PT

Programme

Day 1 – Introduction to Bonsai

- Introduction to Bonsai: what is visual reactive programming?
- How to measure almost anything with Bonsai (from quantities to bytes).
- How to control almost anything with Bonsai (from bytes to effects).
- How to measure and control multiple things at the same time with one computer.
- Demos and applications: a whirlwind tour of Bonsai.

Day 2 – Cameras, tracking, controllers

-

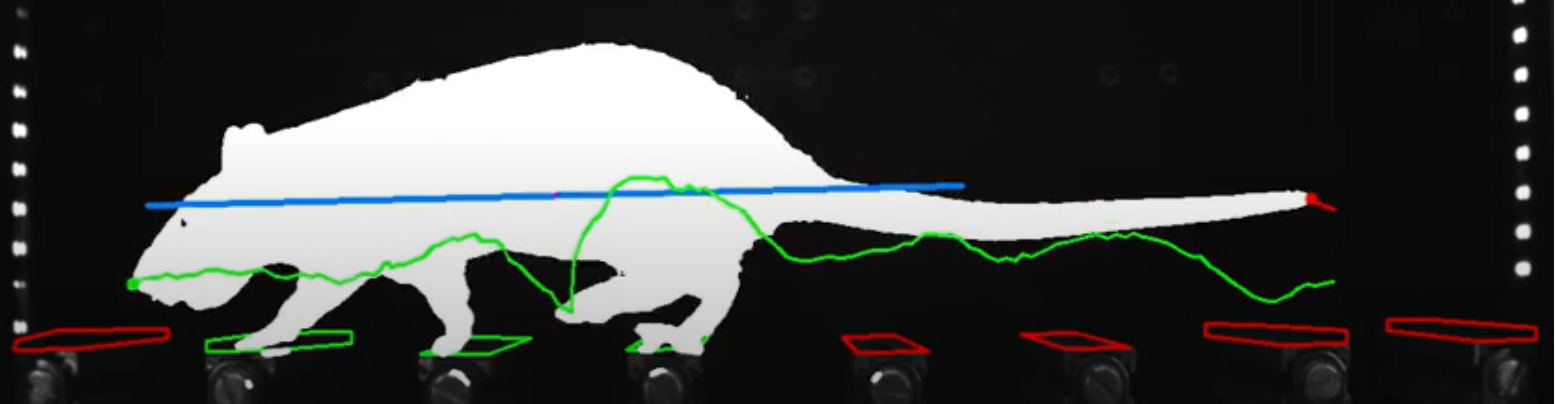

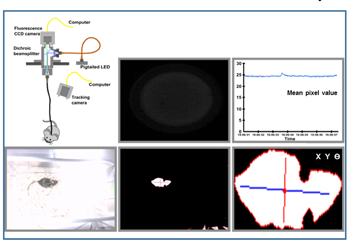

Measuring behavior using a video.

-

Recording real-time video from multiple cameras.

-

Real-time tracking of colored objects, moving objects and contrasting objects.

-

Measuring behavior using voltages and Arduino.

-

Data synchronization. What frame did the light turn on?

Day 3 – Real-time closed-loop assays

- What can we learn from closed-loop experiments?
- Conditional effects: triggering a stimulus based on video activity.
- Continuous feedback: modulating stimulus intensity with speed or distance.
- Feedback stabilization: recording video centered on a moving object.
- Measuring closed-loop latency.

Day 4 – Operant behavior tasks

-

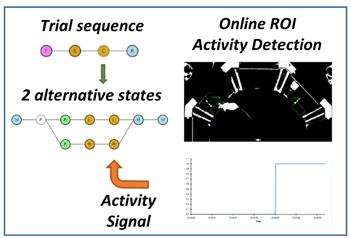

Modeling trial sequences: states, events, and side-effects.

-

Driving state transitions with external inputs.

-

Choice, timeouts and conditional logic: the basic building blocks of reaction time, Go/No-Go and 2AFC tasks.

-

Combining real-time and non real-time logic for good measure.

-

Student project brainstorming

Day 5 – Visual stimulation and beyond

- Interactive visual environments using BonVision.
- Machine learning for markerless pose estimation using DeepLabCut.
- Multi-animal tracking and body part feature extraction with BonZeb.
- Student project presentations.
- Where to next?

Registration

Fee: 300 € (includes lectures and kit)

Applications closed on 20 December 2020.

You are welcome to express your interest in the next Cajal Bonsai NeuroKit using the button in the top banner or here.

To receive more information about this NeuroKit, email info@cajal-training.org